CCN Schedule

Tuesday, August 6, 2024

- 2:00pm Competition Findings (Martin Schrimpf)

- 2:15pm Behavioral Benchmark Winners

- 1. Do Neural Networks Care How Parts are Arranged? (Nick Baker, James Elder)

- 2. Brain-Score on Dotted Line Drawings and Textured Silhouettes (Marin Dujmović, Gaurav Malhotra, Jeff Bowers)

- 2:30pm Neural Benchmark Winners

- 1. Strong amodal completion of occluded objects across early and high-level visual cortices (David Coggan)

- 2. The Animate Space of Inanimate Perception (Andrea Ivan Costantino)

- 3:00pm Pros and Cons of Model Benchmarking (Kohitij Kar)

- 3:10pm Panel Discussion (Jenelle Feather, Dan Yamins, Kohitij Kar, Ev Fedorenko, Jeff Bowers, Eero Simoncelli; moderated by Jim DiCarlo)

Common critiques of the Brain-Score platform

Testing on the same data over and over again can help modelers game the system.

Why should we trust the Brain-Score rankings on these hand-picked benchmarks?

We need to test models on specific hypotheses, not observational data.

Why should models only hit specific benchmarks? I don't care about these benchmarks!

These and other critiques of the Brain-Score platform are common in our community. We hear you!

We take pride in developing an openly available, integrative benchmarking platform that allows the community to operationalize and act on this and other feedback. In 2022, we organized the first Brain-Score competition, which invited model submissions and evaluated them on our existing benchmarks. In 2024, we have turned the tables! This year, in the spirit of an adversarial collaboration, we invite experimentalists and the community at large to turn their legitimate concerns into concrete benchmarks that will challenge, and hopefully expose, the explanatory gaps between our current models of primate vision and the biological brain.

2024 Brain-Score Competition

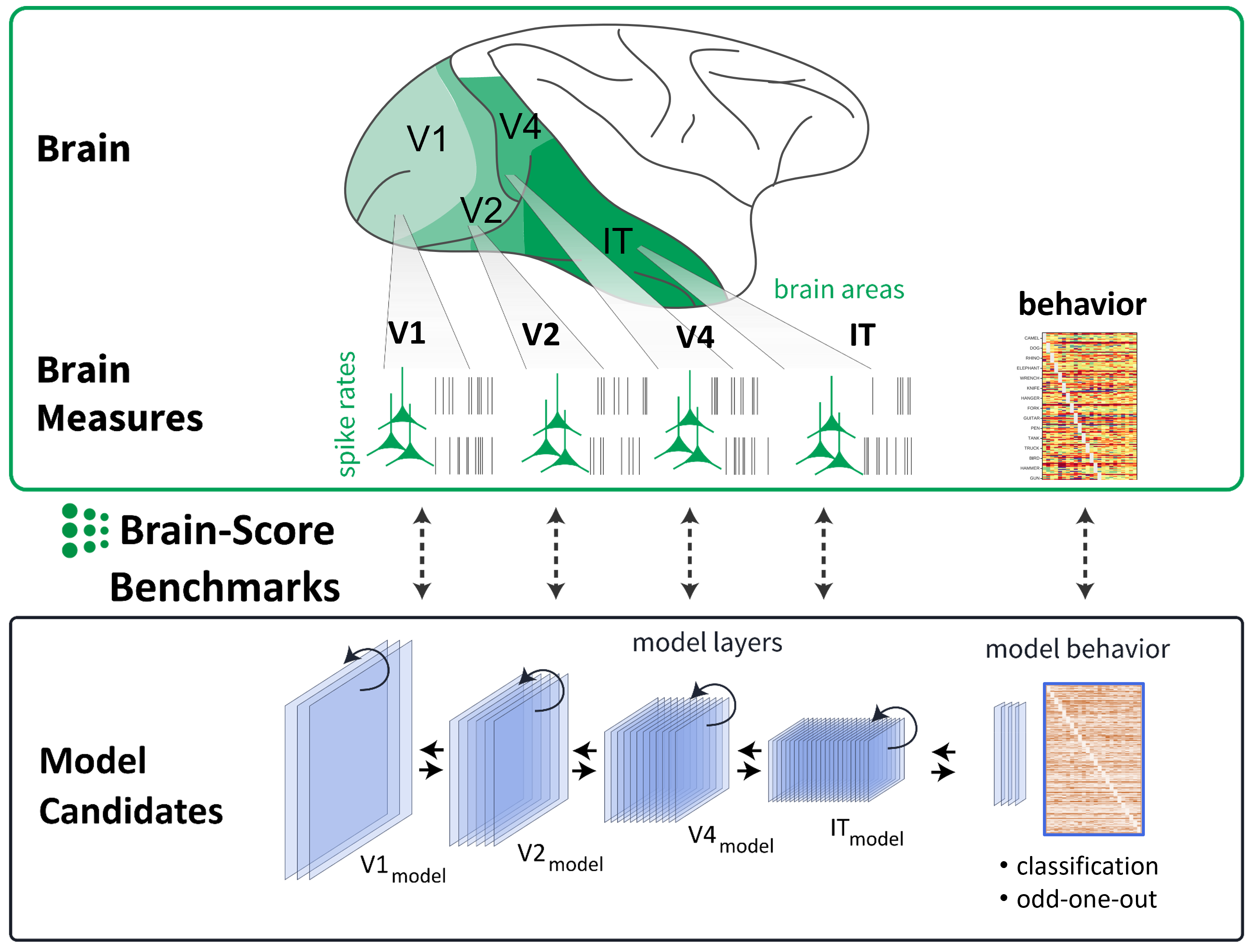

Our ability to recognize objects in the world relies on visual processing along the ventral visual stream, a set of hierarchically organized cortical areas. Recently, machine-learning researchers and neuroscientists have produced computational models that have achieved moderate success at explaining primate object recognition behavior and the neural representations that support it. This second edition of the Brain-Score Competition aims to find benchmarks on which the predictions of our current top models break down.

In 2022, the first Brain-Score Competition led to new and improved models of primate vision that predicted existing benchmarks reasonably well. In this year's Brain-Score Competition 2024, we will close the loop on testing model predictions by rewarding the benchmarks that show where models are least aligned with the primate ventral visual stream. The Competition is open to the scientific community, and we provide infrastructure to evaluate a variety of models on new behavioral and neural benchmarks in a standardised and unified manner. In addition, we will incentivize benchmark submissions by providing visibility to participants and a $10,000 prize pool for the winning benchmarks.

Submissions are open until July 8, 2024 (extended from June 30). For regular updates related to the competition, please follow Brain-Score on Twitter and join our community. Good luck!

Overview

Participants should submit their benchmarks through the Brain-Score platform. Brain-Score currently accepts any benchmark that tests model predictions of either core object recognition behavior or neural responses (spike rates) along the ventral visual stream areas. We encourage submissions of both behavioral and neural benchmarks. To facilitate benchmark submission, we provide helper code and tutorials. You can also reuse existing datasets or metrics and recombine them in novel ways. Note that when you use the Brain-Score platform, no knowledge of the models is required: you can treat each model like another primate subject.
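The idea of treating a model "like another primate subject" can be illustrated with a short, self-contained Python sketch: the experiment code talks only to a generic subject interface and never inspects model internals. All names below (`Subject`, `run_labeling_experiment`, `ConstantSubject`) are hypothetical. Brain-Score's actual model interface (a `BrainModel` class with methods such as `start_task` and `look_at`) uses different signatures, so check the model interface documentation before writing a real benchmark.

```python
from typing import List, Protocol, Sequence


class Subject(Protocol):
    """Hypothetical minimal subject interface, loosely inspired by
    Brain-Score's model interface; NOT the real BrainModel class."""

    def start_task(self, task: str) -> None: ...

    def look_at(self, stimuli: Sequence[str]) -> List[str]: ...


def run_labeling_experiment(subject: Subject, stimuli: Sequence[str],
                            labels: Sequence[str]) -> float:
    """Run one protocol on any subject and return its labeling accuracy."""
    subject.start_task("label")
    responses = subject.look_at(stimuli)
    correct = sum(response == truth for response, truth in zip(responses, labels))
    return correct / len(labels)


class ConstantSubject:
    """Toy stand-in subject that always gives the same answer."""

    def __init__(self, answer: str) -> None:
        self.answer = answer
        self.task = None

    def start_task(self, task: str) -> None:
        self.task = task  # remember which task was requested

    def look_at(self, stimuli: Sequence[str]) -> List[str]:
        return [self.answer for _ in stimuli]
```

Any object implementing these two methods can be passed in, whether it wraps a neural network or replays recorded responses; the experiment code stays the same.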

Benchmarks have to use models within their current "operating regime". This means that there are three behavioral tasks (imagenet-labeling, probabilities, odd-one-out) and four brain regions to record from (V1, V2, V4, IT). Until April, we will accept suggestions for other behavioral tasks, provided you work with us to make sure the models can engage in them. The stimuli should be static images (i.e., no videos this round). In this round, benchmarks should not test temporal dynamics, but you can still specify a single "time_bin" from which to record the models.
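As a rough illustration of the operating-regime rules above, the sketch below checks a proposed benchmark description against them. The `BenchmarkSpec` dataclass, the `regime_problems` helper, and the string spellings of the tasks and regions are assumptions made for this example; they are not part of the Brain-Score API.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Task and region names follow the competition rules; the exact string
# spellings are assumptions for this sketch, not Brain-Score identifiers.
ALLOWED_TASKS = {"label", "probabilities", "odd_one_out"}
ALLOWED_REGIONS = {"V1", "V2", "V4", "IT"}


@dataclass
class BenchmarkSpec:
    """Hypothetical description of a proposed benchmark."""
    task: Optional[str] = None             # behavioral task, if any
    region: Optional[str] = None           # recorded brain region, if any
    stimulus_kind: str = "static_image"    # e.g. "static_image" or "video"
    time_bins: Tuple[Tuple[int, int], ...] = ()  # (start_ms, end_ms) pairs


def regime_problems(spec: BenchmarkSpec) -> List[str]:
    """Return the reasons a spec falls outside the 2024 operating regime."""
    problems = []
    if spec.task is not None and spec.task not in ALLOWED_TASKS:
        problems.append(f"unsupported task: {spec.task}")
    if spec.region is not None and spec.region not in ALLOWED_REGIONS:
        problems.append(f"unsupported region: {spec.region}")
    if spec.stimulus_kind != "static_image":
        problems.append("stimuli must be static images")
    # No temporal dynamics this round: at most one time bin.
    if len(spec.time_bins) > 1:
        problems.append("specify at most a single time bin")
    return problems
```

For example, `regime_problems(BenchmarkSpec(region="MT"))` returns `["unsupported region: MT"]`, because MT is not among the four regions the models can be recorded from.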

Competition Tracks

Behavioral Track

This track will reward benchmarks that showcase model shortcomings in predicting behavior (human or non-human primate).

The winning submissions will be the behavioral benchmarks with the lowest model scores, mean-averaged over all models, i.e., as close as possible to 0.

The benchmarks can target any behavioral task that the models engage in (labeling, class probabilities, odd-one-out; see the model interface).

1st Prize: $3,000

2nd Prize: $2,000

Neural Track

This track will reward benchmarks that showcase model shortcomings in predicting neural activity across the primate ventral visual stream.

The winning submissions will be the neural benchmarks with the lowest model scores, mean-averaged over all models, i.e., as close as possible to 0.

The benchmarks can target any region(s) in the ventral visual stream: V1, V2, V4, IT (see the model interface).

Brain recordings can be from human or non-human primates.

1st Prize: $3,000

2nd Prize: $2,000
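Both tracks use the same ranking rule: average each benchmark's scores over all models, then prefer the lowest average. A minimal sketch of that rule follows, assuming every model is scored on every benchmark and that scores are non-negative (so "as close as possible to 0" means "smallest"). `rank_benchmarks` is a hypothetical helper, not Brain-Score code, and tied benchmarks simply keep their input order.

```python
from statistics import mean
from typing import Dict, List, Tuple


def rank_benchmarks(scores: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """Rank benchmarks by mean score across models, lowest (best) first.

    `scores` maps benchmark name -> {model name -> score}. Assumes scores
    are non-negative, so the smallest mean is the one closest to 0.
    """
    averages = [(name, mean(per_model.values())) for name, per_model in scores.items()]
    # sorted() is stable, so ties keep their original order.
    return sorted(averages, key=lambda item: item[1])
```

The first entry of the returned list corresponds to the benchmark on which the models, on average, fail the most.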

Models

- hmax

- alexnet

- CORnet-S

- resnet-50-robust

- voneresnet-50-non_stochastic

- resnet18-local_aggregation

- grcnn_robust_v1 (top 3 in the 2022 competition)

- custom_model_cv_18_dagger_408 (top 3 in the 2022 competition)

- ViT_L_32_imagenet1k

- mobilenet_v2_1.4_224

- pixels

Workshop

We will organize a workshop as part of the CCN conference at MIT in Cambridge, MA in early August 2024. This workshop will bring together the community interested in testing and building models of brain processing.

We will invite selected participants in the Brain-Score competition to present their benchmarking work during the workshop.

Tutorial

To enter the competition, create an account on the Brain-Score website and submit a benchmark by uploading a zip file on the website. For new datasets, you can either host the data yourself or contact us, and we will give you access to S3. Please check our overview tutorials as well as our full-length tutorial for detailed information about the submission process.
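If you assemble your benchmark locally, packaging it for upload can be scripted with Python's standard `zipfile` module. This is a minimal sketch under stated assumptions: the folder name `my_benchmark` and its file names are hypothetical, and the folder structure Brain-Score actually expects is described in the submission tutorials. Write the archive outside the benchmark folder so it does not try to include itself.

```python
import zipfile
from pathlib import Path
from typing import List


def package_benchmark(plugin_dir: Path, output_zip: Path) -> List[str]:
    """Zip every file under plugin_dir, keeping the folder name as the
    top-level directory inside the archive. Returns the archived names.

    The expected folder layout is an assumption; follow the Brain-Score
    tutorials for the real structure. output_zip must lie outside plugin_dir.
    """
    plugin_dir = Path(plugin_dir)
    names = []
    with zipfile.ZipFile(output_zip, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(plugin_dir.rglob("*")):
            if path.is_file():
                # e.g. "my_benchmark/benchmark.py" (hypothetical file name)
                arcname = path.relative_to(plugin_dir.parent).as_posix()
                zf.write(path, arcname)
                names.append(arcname)
    return names
```

Calling `package_benchmark(Path("my_benchmark"), Path("submission.zip"))` would produce an archive whose entries all start with `my_benchmark/`.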

We tried to make our tutorials as clear and easy to follow as possible for anyone with minimal Python knowledge. However, if you run into any issues, feel free to contact us!

Organizers

Martin Schrimpf

EPFL

Kohitij Kar

York University

Contact

We recommend that participants join our Slack Workspace and follow Brain-Score on Twitter for any questions, updates, benchmark support, and other assistance.

FAQ

load_model function.