Let's Dive Deeper!

One of the biggest benefits Brain-Score offers is integrative benchmarking: a user can submit a model and see how it performs on many benchmarks, not just a single one. This often leads to new insights, correlations, and other interesting findings.

In this first Deep Dive, we will download a sample Brain-Score submission (containing the model ResNet-50), and explore the parts of its submission package. You can then base your own unique submissions on our sample project.

Note: Please do not submit the tutorial file itself via the website. The following walkthrough is just to let you become familiar with how submissions should be structured.

Part 1: Install Necessary Packages

If you have not done so already, we highly recommend completing the Quickstart section above before exploring our ResNet-50 model submission package. Although it is not required, the Quickstart gives you useful background on what model scores mean and how to score a model locally on a single, publicly available benchmark.

If you have already completed the Quickstart, skip ahead to Part 2. Otherwise, please visit here to install the necessary packages for Brain-Score.

Part 2: Download the Starter Zip File

Brain-Score allows users to submit plugins in two ways: directly through the website via a Zip file upload, or through a GitHub pull request (PR). In this first Deep Dive, you will explore a sample model submission to become familiar with the structure of a submission package. You can download the sample Zip file here. It contains a properly formatted, stock version of ResNet-50 pretrained on ImageNet.

In Deep Dives 2 and 3, we will look at a custom model submission and prepare a model for submission via a GitHub pull request, respectively.

Part 3: Exploring the Starter Submission File

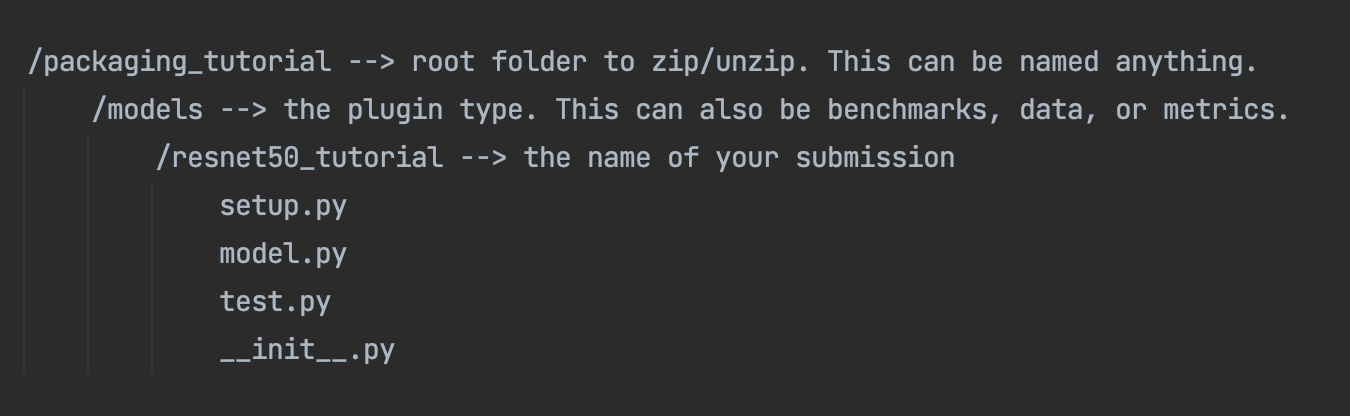

After unzipping the packaging_submission.zip file, you will see that the root folder is called packaging_tutorial. This in turn contains a folder for the plugin type, in this case /models, which contains a folder called /resnet50_tutorial. This final folder contains 4 Python files. The overall structure of the submission package is shown in the image above.

Just like the sample submission, every submission must contain at least the 2 required Python files: __init__.py and test.py. The other two files, setup.py and model.py, are optional, but we explore them below along with the two required files. Let's start with the first required file, __init__.py.

Note: your own submission package should follow this same overall structure, as should submissions of any other plugin type (benchmarks, metrics, or data). Feel free to base your future submissions on this package; just please do not submit the resnet50_tutorial model itself.
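Before zipping a package of your own, you may want to confirm that the required files are in place. The sketch below is a hypothetical helper (check_plugin_folder is not part of Brain-Score); it assumes the path you pass in is the innermost plugin folder, such as packaging_tutorial/models/resnet50_tutorial, and it only checks for the four file names discussed above.

```python
from pathlib import Path

# Hypothetical helper, not part of Brain-Score: reports which required and
# optional files are present in a single plugin folder.
REQUIRED_FILES = ("__init__.py", "test.py")
OPTIONAL_FILES = ("setup.py", "model.py")


def check_plugin_folder(plugin_dir):
    """Return (missing_required, present_optional) for a plugin folder."""
    plugin_dir = Path(plugin_dir)
    missing = [name for name in REQUIRED_FILES if not (plugin_dir / name).is_file()]
    optional = [name for name in OPTIONAL_FILES if (plugin_dir / name).is_file()]
    return missing, optional


if __name__ == "__main__":
    folder = Path("packaging_tutorial") / "models" / "resnet50_tutorial"
    missing, optional = check_plugin_folder(folder)
    if missing:
        print(f"Missing required files: {', '.join(missing)}")
    else:
        print(f"All required files present; optional files found: {optional}")
```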

Part 4: Exploring the __init__.py file

This file adds your plugin to the Brain-Score plugin registry and to the Brain-Score ecosystem at large, registering your model under a unique global identifier. The file is fairly straightforward: lines 1-3 are imports, and line 5 adds the plugin to the registry. When you submit your own models (or other plugins), line 5 is where you add the plugin to our ecosystem.

```
1  from brainscore_vision import model_registry
2  from brainscore_vision.model_helpers.brain_transformation import ModelCommitment
3  from .model import get_model, get_layers
4
5  model_registry['resnet50'] = lambda: ModelCommitment(identifier='resnet50', activations_model=get_model('resnet50'), layers=get_layers('resnet50'))
```
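Note that line 5 registers a lambda rather than a ModelCommitment object: the registry stores a function that builds the model, so the potentially slow construction (loading weights, wrapping layers) only runs when that function is called. The sketch below imitates this pattern with a plain dictionary; registry, load_log, and expensive_load are hypothetical stand-ins, not Brain-Score's actual model_registry implementation.

```python
# Stand-in for a plugin registry: a plain dict mapping identifiers to
# zero-argument factory functions (hypothetical sketch, not the real API).
registry = {}
load_log = []


def expensive_load(name):
    # Placeholder for slow work such as downloading weights or building a model.
    load_log.append(name)
    return f"<model {name}>"


# Registering a lambda stores *how* to build the model, not the model itself.
registry['resnet50'] = lambda: expensive_load('resnet50')
print(load_log)  # nothing has been loaded yet: []

# The model is only constructed when the factory is called.
model = registry['resnet50']()
print(model)     # <model resnet50>
print(load_log)  # ['resnet50']
```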

Please note: Brain-Score does not allow duplicate plugin names, so if you submit another version of the same model, make sure to give it a unique identifier!

Also note: it would be prohibitively time- and resource-consuming to load every plugin in a registry before it is needed, so the plugin discovery process relies on string parsing. Unfortunately, this means it is not possible to add plugin identifiers to the registry programmatically; each registration must be written out explicitly in the form plugin_registry['my_plugin_identifier'], as on line 5 above. Next, let's check out the second required file, test.py.
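Before moving on, here is a small illustration of why registrations must be written out literally. The sketch below is a hypothetical, simplified scanner (it is not Brain-Score's actual discovery code) that uses a regular expression to find model_registry['...'] assignments in source text without importing anything. A literal registration is found, while one built in a loop is invisible to it; the scanner also flags identifiers registered more than once.

```python
import re
from collections import Counter

# Matches literal registrations such as: model_registry['resnet50'] = ...
# Simplified, hypothetical pattern for illustration only.
REGISTRATION = re.compile(r"""model_registry\[\s*['"]([^'"]+)['"]\s*\]\s*=""")


def find_identifiers(source):
    """Return identifiers registered literally in a Python source string."""
    return REGISTRATION.findall(source)


def find_duplicates(identifiers):
    """Return identifiers that appear more than once."""
    return sorted(name for name, count in Counter(identifiers).items() if count > 1)


explicit = "model_registry['resnet50'] = lambda: build('resnet50')\n"
looped = (
    "for name in ['resnet18', 'resnet34']:\n"
    "    model_registry[name] = lambda name=name: build(name)\n"
)

print(find_identifiers(explicit))  # ['resnet50']
print(find_identifiers(looped))    # [] -> loop-based registration is not discovered
print(find_duplicates(["resnet50", "resnet50_v2", "resnet50"]))  # ['resnet50']
```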

Part 5: Exploring the test.py File

You will notice that the submission's test.py file is blank. This is temporary: the Brain-Score team is currently writing a suite of tests that every model will run, which can then be incorporated into this file.

For now, if you are submitting a model yourself, you can just leave this file blank, but still make sure to include it in your submission package.

Part 6: Exploring an (optional) setup.py File

This file is where you can list any requirements your model needs, by adding the packages to the requirements list on lines 6-8. If your model had any extra requirements, such as a specific version of a package or an external git repository, you would add them to that list. Lines 10-25 are administrative definitions and can be left untouched.

```
 1  #!/usr/bin/env python
 2  # -*- coding: utf-8 -*-
 3
 4  from setuptools import setup, find_packages
 5
 6  requirements = [ "torchvision",
 7                   "torch"
 8  ]
 9
10  setup(
11      packages=find_packages(exclude=['tests']),
12      include_package_data=True,
13      install_requires=requirements,
14      license="MIT license",
15      zip_safe=False,
16      keywords='brain-score template',
17      classifiers=[
18          'Development Status :: 2 - Pre-Alpha',
19          'Intended Audience :: Developers',
20          'License :: OSI Approved :: MIT License',
21          'Natural Language :: English',
22          'Programming Language :: Python :: 3.7',
23      ],
24      test_suite='tests',
25  )
```
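For example, pinning a minimum version and pulling a package straight from a git repository could look like the list below. The version pin, the package name example-package, and its repository URL are hypothetical placeholders; the "name @ git+https://..." form is a standard PEP 508 direct reference that pip understands in install_requires.

```python
# Hypothetical requirements list for setup.py: the pin, package name, and
# repository URL are placeholders, not dependencies of the tutorial model.
requirements = [
    "torchvision",
    "torch>=1.13",  # a minimum-version pin (illustrative)
    # PEP 508 direct reference to a git repository (placeholder URL):
    "example-package @ git+https://github.com/example-org/example-package.git",
]

print(len(requirements))  # 3
```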

Note: setup.py is optional because you can also use a requirements.txt or pyproject.toml file to add any requirements you might need. If your model needs no extra packages, you can leave setup.py out of the submission package completely.
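If you keep your dependencies in requirements.txt but still want a setup.py, one common pattern is to read that file into the requirements list. The helper name read_requirements is hypothetical, and this simplified parser only skips blank lines and full-line comments; it does not handle pip options such as -r or -e.

```python
from pathlib import Path


def read_requirements(path="requirements.txt"):
    """Return requirement strings from a requirements file.

    Simplified sketch: skips blank lines and lines starting with '#'.
    """
    requirements = []
    for raw in Path(path).read_text().splitlines():
        line = raw.strip()
        if line and not line.startswith("#"):
            requirements.append(line)
    return requirements

# In setup.py you could then pass: install_requires=read_requirements()
```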

Part 7: Exploring an (optional) model.py File

This file is usually where the model itself is defined. It is optional because model.py is simply a convention we use to keep code separated, not a requirement. It is also plugin-specific. We do think it is good practice, however, to put model code in a separate model.py to keep things neat.

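Let's explore this file in more detail. Lines 1-9 are imports (line 17 repeats the check_models import from line 1; the duplicate is harmless). Lines 11-16 are comments with important guidance: the wrapper identifier must be unique per model, and the model should be loaded for CPU use, since no GPUs are available for scoring. Lines 20-21 define the get_model_list() function, which returns a list containing the model identifier. Next, lines 24-30 define the get_model() function, which builds the model: line 26 loads ResNet-50 with pretrained ImageNet weights from torchvision, line 27 sets up image preprocessing with an image size of 224, and line 28 wraps the model in a PytorchWrapper under the identifier 'resnet50'. If you have a custom model that you created yourself, check out our Custom Model Submission Guide here in Deep Dive 2. Lines 33-35 contain the get_layers() function, which returns the names of the layers you want Brain-Score to consider when scoring the model.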

```
 1  from brainscore_vision.model_helpers.check_submission import check_models
 2  import functools
 3  import os
 4  import torchvision.models
 5  from brainscore_vision.model_helpers.activations.pytorch import PytorchWrapper
 6  from brainscore_vision.model_helpers.activations.pytorch import load_preprocess_images
 7  from pathlib import Path
 8  from brainscore_vision.model_helpers import download_weights
 9  import torch
10
11  # This is an example implementation for submitting resnet-50 as a pytorch model
12
13  # Attention: It is important that the wrapper identifier is unique per model!
14  # The results will otherwise be the same due to Brain-Score's internal result caching mechanism.
15  # Please load your pytorch model for use on CPU. There won't be GPUs available for scoring your model.
16  # If the model requires a GPU, contact the Brain-Score team directly.
17  from brainscore_vision.model_helpers.check_submission import check_models
18
19
20  def get_model_list():
21      return ['resnet50']
22
23
24  def get_model(name):
25      assert name == 'resnet50'
26      model = torchvision.models.resnet50(pretrained=True)
27      preprocessing = functools.partial(load_preprocess_images, image_size=224)
28      wrapper = PytorchWrapper(identifier='resnet50', model=model, preprocessing=preprocessing)
29      wrapper.image_size = 224
30      return wrapper
31
32
33  def get_layers(name):
34      assert name == 'resnet50'
35      return ['conv1', 'layer1', 'layer2', 'layer3', 'layer4', 'fc']
36
37
38  def get_bibtex(model_identifier):
39      return """xx"""
40
41
42  if __name__ == '__main__':
43      check_models.check_base_models(__name__)
```

Lines 38 and 39 define the get_bibtex() function, which returns the BibTeX citation for the model (here just a placeholder). You can leave this blank when submitting, but we highly recommend adding a reference. Finally, lines 42-43 call check_models.check_base_models() when model.py is run directly, which scores our model locally on the MajajHong2015public.IT-pls benchmark.
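For this tutorial model, a filled-in get_bibtex() could return the original ResNet paper (He et al., CVPR 2016) instead of the placeholder. The citation key he2016deep is just a conventional choice; use whichever reference best describes your own model.

```python
def get_bibtex(model_identifier):
    # Citation for the ResNet architecture (He et al., CVPR 2016).
    return """@inproceedings{he2016deep,
  title={Deep Residual Learning for Image Recognition},
  author={He, Kaiming and Zhang, Xiangyu and Ren, Shaoqing and Sun, Jian},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  pages={770--778},
  year={2016}
}"""
```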

Part 8: Putting it All Together

You are almost done! If you were actually submitting a model, the final step would be to run your model locally to ensure that everything is in working order. You can do this by running the model.py file itself (for example, with python model.py). Please note that this took roughly 5-10 minutes on a 2023 M1 Max MacBook Pro, so your run times may vary. Once it finishes, it should print the message below, indicating that you are ready to submit:

Test successful, you are ready to submit!

Once you receive this message, save everything, re-zip your package, and you are ready to submit your model.
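Any archive tool works for re-zipping; as one option, Python's standard shutil.make_archive can do it. The sketch below assumes you zip the root folder (packaging_tutorial) so the archive has the same top-level layout as the sample download; zip_package and the output name my_submission are hypothetical placeholders.

```python
import shutil
from pathlib import Path


def zip_package(package_root, output_name="my_submission"):
    """Zip package_root (keeping its top-level folder) into <output_name>.zip."""
    package_root = Path(package_root).resolve()
    # root_dir is the folder that archive paths are relative to; base_dir is the
    # folder inside it to include, so every entry starts with package_root's name.
    archive = shutil.make_archive(
        output_name,
        "zip",
        root_dir=package_root.parent,
        base_dir=package_root.name,
    )
    return Path(archive)
```

For example, zip_package("packaging_tutorial") would write my_submission.zip to the current working directory, with packaging_tutorial/ as the top-level folder inside the archive.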

When you submit an actual (non-tutorial) model, you will receive an email with your results within 24 hours (most of the time it only takes 2-3 hours to score). If you would like to explore a custom model's submission package, please visit the next Deep Dive here.

Our tutorials and FAQs, created with Brain-Score users, aim to cover all bases. However, if issues arise, reach out to our community or consult the troubleshooting guide below for common errors and solutions.